See what's on the agenda

Explore our interactive agenda to discover session topics and times, speaker backgrounds, and complete details for all scheduled activities.

- Deploy

- Catalyst

Breakfast - on Patio/Common Areas

8 AM > 9 AM (PST)

You can write code faster. Can you deliver it faster? by Hans Dockter, Gradle

9 AM > 9:30 AM (PST)

Generative AI is redefining how code gets written—faster, more frequently, and at greater scale. But your delivery pipeline wasn’t built for this. The more code GenAI produces upstream, the more pressure it puts on downstream systems: build, test, compliance, and deployment all become bottlenecks. Without significant change, GenAI won’t accelerate delivery—it’ll break it.

This talk explores how GenAI is increasing code volume, reducing comprehension, and encouraging large, high-risk batches that overwhelm even mature CI/CD systems. The result? Slower feedback loops, more failures, and mounting friction between experimentation and enterprise delivery.

To break this cycle, pipelines must significantly improve their performance, troubleshooting efficiency, and developer experience. A “GenAI-ready” pipeline must handle significantly more throughput without compromising quality or incurring unsustainable cost.

This isn’t just a matter of scaling infrastructure. It demands smarter pipelines, with improved failure troubleshooting, intelligent parallelization, predictive test orchestration, universal caching, and policy automation working in concert to eliminate wasted cycles, both in terms of compute power and developer productivity.

Crucially, these capabilities must shift left into developers’ local environments where observability, fast feedback, and root-cause insights can stop incorrect, insecure, and unverified code before CI even begins.

The future of delivery isn’t just faster. It’s smarter, leaner, and built to scale with GenAI.

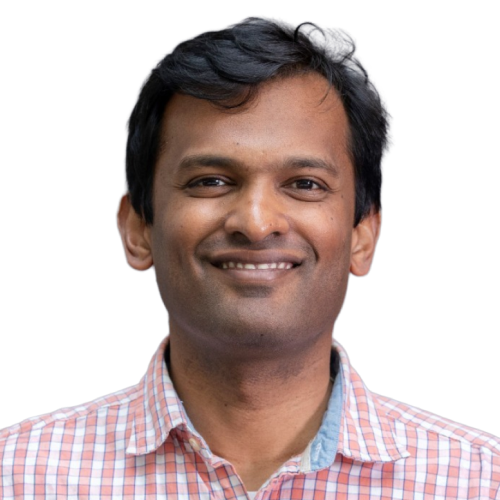

Beyond the Commit: A Fireside Chat on AI and Developer Productivity by Brian Houck, Microsoft and Nachiappan Nagappan, Meta

9:30 AM > 9:50 AM (PST)

Join Brian Houck (Microsoft) and Nachiappan Nagappan (Meta)—two of the most influential voices in Developer Productivity—for a rare and insightful fireside chat. Drawing on decades of research and industry experience, Brian and Nachi will explore the evolving landscape of Developer Productivity metrics, the transformative role of AI across the entire software development lifecycle (far beyond code generation), and where they would invest—with no budget constraints—to drive the next wave of innovation in Developer Productivity Engineering in the era of generative AI.

Optimizing for Time: Dark Matter by Karim Nakad, Meta

10 AM > 11 AM (PST)

Much of the work in the productivity space is focused on build speed or tooling improvements. Although beneficial, there is a larger opportunity available: non-coding time.

Standup updates, XFN alignment, chat, email, task management. All of these constitute the “Dark Matter” overhead that engineers have to deal with on a daily basis.

We’ll dive into how Meta measures time, many of Meta’s top-line velocity metrics, and even some insights you can implement today, in order to help your engineers be more productive.

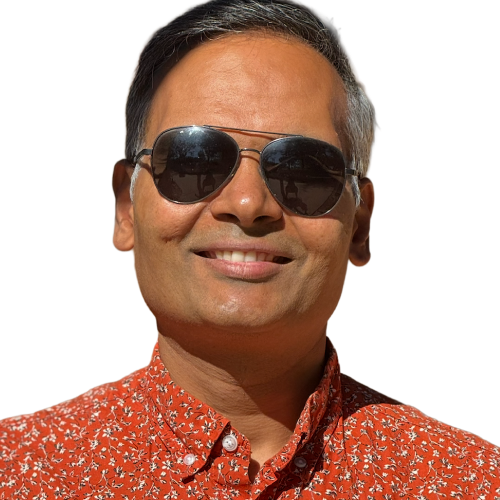

Salesforce’s AI journey for Developer Productivity: From Single Tool to Multi-Agent Development Ecosystem by Venkad Dhandapani, Salesforce

11 AM > 12 PM (PST)

Salesforce’s AI journey for developer productivity began in early 2023 with a single tool that demonstrated 30+ minutes of daily time savings. The journey accelerated through 2024-2025 with the introduction of Model Context Protocol (MCP) exchanges, AI Rules systems, specialized tools for code reviews, and ambient task agents for automated testing. Today’s ecosystem offers developers a multi-tool, multi-agent experience. This transformation represents a shift from single-tool adoption to an orchestrated multi-agent development environment that amplifies human creativity while maintaining enterprise security and compliance standards.

Lunch - on Patio/Common Areas

12 PM > 1 PM (PST)

From Lag to Lightning: Transforming Dependency Update Timelines by Roberto and Aubrey, Netflix

1 PM > 2 PM (PST)

Discover how we transformed our dependency update process so that the latest dependency versions now undergo zero-touch deployment in hours (rather than days or weeks) across thousands of repositories. This talk will highlight our innovative use of dependency management resolution rules and automated SCM changes, and how we track them with post-deployment artifact observability tools, showcasing the efficiency and speed achieved. We’ll also touch on future advancements, including language-agnostic tooling and proactive security measures, offering insights into maintaining robust and secure software delivery.

How might we improve the impact of AI on developers’ productivity? by Elise Paradis, Google

2 PM > 3 PM (PST)

In this presentation, I will discuss some of the findings from two different research projects I led at Google, both of which aim to answer the question: “How might we improve the impact of AI on developers’ productivity?”

We have promised our developers that AI could make them 20-55% faster when coding, but what happens if developers do not use AI? I will cover some of the things I learned about the characteristics of developers who are faster with AI and of those who are more likely to use AI, as well as what I learned about which AI feature characteristics affect developers’ AI use.

The Critical Role of Troubleshooting in an ML-based development process by Laurent Ploix, Gradle

3 PM > 4 PM (PST)

This talk explores the critical role of troubleshooting in modern, ML-driven software development. We first look at traditional DORA metrics and the valuable insights they offer. Their inherent lag and delayed feedback loops, however, make them hard to use for effective optimization. The presentation introduces “Local DORA” metrics, such as Time To Restore (TTR) for a failing local or pull-request build, as more actionable proxies that provide immediate feedback, enabling organizations to react swiftly to issues. In particular, optimizing local TTR is paramount for accelerating development speed. The talk will then address the dual impact of AI/ML on troubleshooting: while ML-generated code can complicate debugging, AI tools, such as those in Develocity, can significantly enhance troubleshooting capabilities, shorten feedback loops, and therefore improve development efficiency.
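For readers who want a concrete picture of a local Time To Restore, here is a minimal sketch. The abstract does not say how the metric is computed, so this assumes one plausible definition: for each stream of builds (a developer workspace or a pull request), the time from the first failing build of a streak to the next passing build. The `Build` record, its field names, and the helper functions are hypothetical and are not taken from Develocity or any other tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class Build:
    """One build result. All field names are hypothetical."""
    stream: str            # e.g. a developer workspace or a pull request ID
    finished_at: datetime
    passed: bool


def restore_times(builds: list[Build]) -> list[timedelta]:
    """For each failure streak, return the time from its first failing
    build to the next passing build in the same stream."""
    durations: list[timedelta] = []
    first_failure: dict[str, datetime] = {}
    for build in sorted(builds, key=lambda b: b.finished_at):
        if not build.passed:
            # Remember only the start of the streak, not later failures.
            first_failure.setdefault(build.stream, build.finished_at)
        elif build.stream in first_failure:
            durations.append(build.finished_at - first_failure.pop(build.stream))
    return durations


def median_ttr(builds: list[Build]) -> Optional[timedelta]:
    """Median restore time, or None if no failure was ever restored."""
    durations = restore_times(builds)
    return median(durations) if durations else None
```

Calling `median_ttr` on a day of build records gives a single number that can be tracked over time. Using the median rather than the mean is a design choice made here so that one unusually long outage does not dominate the figure.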

Lightning Talk: Beyond the Keyboard: Reclaiming Idle Time for Developer Productivity by Shivang Shah, Square

4 PM > 4:20 PM (PST)

Modern developer machines are powerful—but underutilized. Between meetings, context switches, and breaks, they sit idle for hours each day, waiting for the next command. In this talk, I’ll introduce MDX Daemon, a lightweight background service we built to turn idle time into real productivity. I’ll share how we used it to boost Gradle configuration cache hit rates, reduce build times, and unlock proactive workflows—without changing how developers work. If you’ve ever said “we’d do this if we had time,” this talk is for you.

Lightning Talk: Measure, Don’t Guess: Observability as the Key to Performance Tuning Software Delivery by Trisha Gee, Gradle

4:20 PM > 4:40 PM (PST)

The golden rule of application performance tuning: measure, don’t guess. Yet when it comes to developer productivity, too many teams still guess. Builds are slow, tests are flaky, CI feels overloaded—and the default response is to throw hardware at the problem or hope it goes away.

In this talk, we’ll apply the performance engineering mindset to developer experience, showing how observability data from Develocity can profile builds and tests just like applications. By measuring and optimizing build and test performance, teams directly improve the DORA metrics that matter: shorter lead time for changes, lower change failure rates, faster recovery, and higher deployment frequency.

Developer productivity is a performance problem. If you want faster delivery and happier developers, the path is the same as for applications in production: measure first, then optimize.

Lightning Talk: Unleashing Developer Productivity: Supercharging Zero Day Cloud-Native Developer Experience from IDE by Muktesh Mishra, Adobe

4:40 PM > 5 PM (PST)

You invest your time and effort in writing beautiful code, then deploy that somewhere in the cloud using containers, Kubernetes, Argo Pipelines, and whatnot. It is all fine and dandy from this point on. Too good to be true? Of course!

However, for a developer, using multiple technologies can be tricky, as they need to go to different places to get the work done.

This session is about how we can improve the developer experience by driving all actions (code, test, deploy, monitor, debug, and fix) from the Integrated Development Environment (IDE) itself, thus shifting left to get instant feedback.

Through a series of code snippets and a custom IDE extension, we will introduce the methods to increase developer productivity from day zero with everything available in one place related to code, testing, deployment, monitoring, and troubleshooting.

Measuring the Impact of AI on Developer Productivity at Meta by Pavel and Payam, Meta

5 PM > 6 PM (PST)

With AI adoption at the forefront of developer productivity investments across the industry, the question of how to measure ROI is dominating discourse. At Meta, we’ve established a comprehensive suite of metrics that measures the impact of AI on developer productivity.

In this session, we’ll describe the extensive telemetry we have created to support this, explain how to handle complex source control operations, and share directional correlations with more classic developer productivity metrics.

By the end of this talk, you will understand how Meta has approached AI-driven developer productivity all the way from adoption to the value it adds to our business.

Happy Hour - on Patio/Common Areas

6 PM > 8 PM (PST)

Breakfast - on Patio/Common Areas

7:45 AM > 9 AM (PST)

What Makes a Great Developer Experience? by Max Kanat-Alexander, Capital One

9 AM > 9:20 AM (PST)

Having a great developer experience boils down to three key points: speeding up iteration cycles, improving developer focus time, and reducing cognitive load. But what does that really mean, practically, in an actual business? What do you really _do_ to accomplish those things? And how do you overcome all the technical and human barriers to solve it? I can’t tell you all the answers in 30 minutes, but I think you’ll be surprised at how much we _can_ cover about the most important pieces, in that time frame.

Developer Productivity in the Age of GenAI by Vic Iglesias, Netflix

9:30 AM > 9:50 AM (PST)

Talk details coming soon!

Universal Cache: It’s time for fast, reliable, and cheap CI pipelines by Etienne and Mirco, Gradle

10 AM > 11 AM (PST)

As generative AI continues to drive rapid innovation in software development, the volume of code, tests, and iterations will only increase. This increased volume creates immense pressure on CI/CD pipelines, leading to longer build times, more load on infrastructure, and higher costs. Infrastructure teams face the growing challenge of managing increasingly complex build pipelines while still getting changes through at the speed of business. Optimizing build performance and reliability is no longer optional; it is critical to maintaining pipeline efficiency and developer productivity, and to keeping infrastructure costs under control.

In this talk, Etienne Studer, SVP of Engineering at Gradle Inc. and Co-Founder of Develocity, will discuss how observability empowers organizations to pinpoint build performance bottlenecks and surface build toolchain unreliabilities. He will then introduce Universal Cache, a transformative feature designed to cache all critical aspects of a build, from configuration and dependencies to outputs, and applicable to all CI providers and multiple build systems.

Attendees will leave with a clear understanding of how observability can pinpoint performance and reliability issues, how Develocity accelerates CI/CD pipelines via Universal Cache, and how data enables organizations to validate the success of these optimizations and their business benefits.

JetBrains DevEco Survey: What Thousands of Developers Tell Us About Productivity in Their Companies by Olga and Yanina, JetBrains

11 AM > 12 PM (PST)

What happens when you ask several thousand developers and their managers around the world how they experience productivity and what developer experience really means to them? At JetBrains, we set out to find answers, not just about tools and metrics, but about the human side of developer productivity and experience. In this talk, we’ll share key insights from our Developer Ecosystem Survey, which now includes a dedicated section on DevEx and DP.

You’ll hear what developers and managers are really saying about the way companies are currently approaching DevEx and productivity: what helps, what hinders, and what they wish companies did differently. With a mix of research insights and practical takeaways, this talk is for anyone working to improve developer experience and productivity.

Lunch - on Patio/Common Areas

12 PM > 1 PM (PST)

Developer experience in the AI era by Abi and Nicole, DX

1 PM > 2 PM (PST)

Join Nicole Forsgren and Abi Noda for a fireside conversation about their upcoming book, Frictionless (Aug ’25 release). Learn about the biggest lessons from the book, including in-depth strategies for spearheading DevEx programs, measuring developer experience, and how AI is altering the landscape.

Attendees will receive exclusive early access to the book.

“Programming” GenAI into Developer Productivity by Eric and Jasmine, Netflix

2 PM > 3 PM (PST)

Join Eric Wendelin and Jasmine Robinson from Netflix to go beyond the hype. In this session, they will walk you through the real-world challenges and strategic decisions behind implementing GenAI for developer productivity. You’ll hear about their experiences with build vs. buy, what they’ve learned from piloting AI coding agents, and how they are fostering a culture of AI innovation through a dedicated champions community. Learn how Netflix is tackling the critical task of prioritizing what matters most for developer productivity in the rapidly evolving AI landscape.

Measuring Engineering Productivity from a Standing Start: A 330-Year-Old Bank’s Journey by Hilary and Tom, Lloyds Bank

3 PM > 4 PM (PST)

How do you begin measuring developer productivity in an organization with centuries of legacy, fragmented engineering practices, and ambitious FinTech aspirations? Join Hilary Lanham and Tom Kelk as they share the journey of introducing Engineering Productivity measurement at Lloyds Banking Group—Britain’s largest bank, now aiming to become the UK’s leading FinTech.

In this session, you’ll hear how Lloyds is tackling the challenge of aligning diverse engineering teams post-merger, navigating cultural and technical friction, and building a data-informed productivity strategy from scratch. Learn how they approached:

- Building a developer productivity strategy from the ground up in a legacy-rich environment

- Combining qualitative and quantitative insights to shape engineering outcomes

- Applying a product mindset to drive adoption, engagement, and continuous improvement

- Turning complex data into meaningful action for teams and stakeholders

Whether you’re starting your own productivity journey or scaling an existing one, this session offers practical lessons and strategic thinking from one of the most complex environments imaginable.

Lightning Talk: Gradle: Your Build, Your Rules by Aurimas Liutikas, Google

4 PM > 4:20 PM (PST)

As your Gradle project gets bigger, it can get harder to make sure everything is built in exactly the way you want it. This talk will be a discussion about building your own Gradle plugin that wraps all other plugins, exposing the bare minimum number of knobs to make all subprojects workable. We’ll discuss challenges, creative solutions, and inter-plugin cooperation (e.g., with the Kotlin Gradle Plugin). You’ll hear about tools to ensure your developers stay on the well-lit path. A lot of the inspiration for this comes from the experience with the AndroidX library build.

Lightning Talk: Your Toolchain is Production: The Case for Observability by Brian Demers, Gradle

4:20 PM > 4:40 PM (PST)

Let’s face it: your developer toolchain—everything from your local build to your CI/CD pipelines—is a production system. When it goes down, so does your team’s productivity and your ability to ship software. Yet, we often treat it as an afterthought, ignoring the flaky tests, slow builds, and other bottlenecks that hold us back.

In this talk, we’ll discuss why treating your toolchain as a critical production system is the key to unlocking better software delivery. We’ll explore how to apply observability practices—using logs, metrics, and traces—to diagnose problems, optimize performance, and improve the developer experience. We’ll also touch on how a well-observed toolchain can simplify governance, risk management, and compliance (GRC). We’ll share practical strategies for getting started and show you how a data-driven approach to your toolchain can dramatically increase your team’s efficiency, reliability, and security.

Lightning Talk: Shift Left, Not Off a Cliff: Making Early Testing Actually Work by Vishruth Ashok, CloudKitchens

4:40 PM > 5 PM (PST)

“Shift left” has become a mantra in modern software delivery—but in practice, it’s often just a fancy way of saying “give developers more testing responsibilities with less support.” The result? Bloated pipelines, brittle tests, frustrated engineers, and bugs that still sneak through.

In this talk, we’ll unpack why early testing initiatives so often fail to deliver real value, and what it actually takes to make them work at scale. We’ll explore the pitfalls of prematurely complex test suites, the myth of perfect unit test coverage, and the hidden costs of bad feedback loops. More importantly, we’ll show how to build a pragmatic, developer-friendly testing strategy that shifts quality left without falling off a cliff.

Expect practical tips on test pyramid design, test impact analysis, developer tooling, and how to align QA, dev, and platform teams around meaningful quality goals.

Key Takeaways:

- Why most shift-left efforts fail (and how to avoid it)

- How to design early-stage tests that catch issues without slowing teams down

- Balancing test depth with speed and reliability

- Building shared ownership of quality without overwhelming developers

Happy Hour - on Patio/Common Areas

5 PM > 6:30 PM (PST)

Breakfast - on Patio/Common Areas

8 AM > 10 AM (PST)

Operators, DeepSeek and the Future of AI and Productivity by Juan Marcano, Uber

10 AM > 11 AM (PST)

Traditional script-based tests, like those written with Appium, often struggle with frequent UI changes: they break, and engineers end up spending 30-40% of their time fixing them rather than developing features. At Uber’s scale (operating in thousands of cities, supporting 55 languages, and running countless experiments), manual testing is infeasible. These limitations make automated testing a necessity, but legacy methods are too brittle to handle the dynamic nature of modern apps.

DragonCrawl addresses this challenge by leveraging generative AI to create tests that adapt to UI changes rather than breaking. Once trained, it can be deployed across all Uber applications without frequent retraining, significantly reducing maintenance overhead. Despite its capabilities, DragonCrawl is highly efficient, requiring only a fraction of the computational resources of large models like ChatGPT, making it cost-effective to run at scale. It works by extracting view hierarchies, determining possible actions, and selecting the best one while dynamically adapting to unexpected pop-ups or variations in UI. By separating execution and validation, it ensures reliable results while minimizing test fragility.

AI-powered assertions further enhance test reliability, moving beyond brittle text or ID checks. Instead, testers can ask high-level questions like “Is there an ad on this screen?” or “Is alcohol visible?” using a generalized visual question-answering (VQA) framework. DragonCrawl’s impact is already significant, catching over 30 critical bugs (each potentially saving Uber millions), resolving 100+ localization issues, and even aiding in language rollouts like Pashto and Dari for Afghan refugees. Additionally, OmegaCrawl is transforming Uber’s internal workflows by automating repetitive operational tasks for developers, reducing inefficiencies.

More broadly, the evolution of AI in testing and development reflects a larger trend in AI research—balancing supervised learning (SL) and reinforcement learning (RL). Early AI models relied heavily on SL, which became too rigid, leading to the rise of RL for adaptability. However, RL alone often results in AI exploiting reward functions, requiring human intervention. The field is now shifting toward a hybrid approach, using SL for structure, RL for adaptability, and operator-driven AI for real-world grounding. The future of AI in software testing and beyond lies in this balance, where models can learn, adapt, and execute tasks while staying aligned with real-world constraints.

Maven Turbo reactor by Sergey Chernov, Miro

11 AM > 12 PM (PST)

Let’s take a look at how Maven builds multi-module projects and why it can be slow. There are a few ways to revisit the standard behavior and use multi-core CPUs efficiently. The good news is that this does not even require forking Maven; we can do it with a custom Maven extension.

Slides: https://miro.com/app/board/uXjVLYUPRas=/

Project: https://github.com/seregamorph/maven-turbo-reactor

Lunch - on Patio/Common Areas

12 PM > 1 PM (PST)

Information to Inspiration: Morgan Stanley’s Data-Driven Approach to Developer Productivity by Khalid and Patrick, Morgan Stanley

1 PM > 2 PM (PST)

When Morgan Stanley took on its DevOps transformation, we knew we wanted to use data to drive the adoption of developer best practices. Join us to learn more about how Almanac, our patented warehouse and badge solution, pushed information to developers and helped transform their day-to-day activities, promoting both productivity improvements and the paydown of technical debt.

Testing on Thin Ice: Chipping Away at Test Unpredictability by Brian Demers, Gradle and François Martin, Karakun AG

2 PM > 3 PM (PST)

Ever tried to catch melting snowflakes? That’s the challenge of dealing with flaky tests – those annoying, unpredictable tests that fail randomly and pass when rerun. In this talk, we’ll slide down the slippery slope of why flaky tests are more than just a nuisance. They’re time-sinks, morale crushers, and silent code quality killers.

We’ll skate across real-life scenarios to understand how flaky tests can freeze your development in its tracks, and why sweeping them under the rug is like ignoring a crack in the ice. From delayed releases to lurking bugs, the stakes are high, and the costs are real.

But don’t pack your parkas just yet! We’re here to share expert strategies and insights on how to identify, analyze, and ultimately melt away these flaky tests. Through our combined experience, we’ll provide actionable techniques and tools to make sure snow is the only flakiness you experience, ensuring a smoother, more reliable journey in software development.

Reimagining CI/CD with Agentic AI: The Future of Platform Engineering in Financial Institutions by Bishwajeet, Subha and Mani, JPMorgan Chase

3 PM > 4 PM (PST)

In highly regulated environments like financial institutions, Platform Engineering needs more than automation—it needs intelligence, resilience, and compliance by design.

This session introduces a next-gen agentic CI/CD platform powered by LangGraph, LangChain, and GPT Agents that:

- Orchestrates intelligent pipeline stages

- Performs test optimization and root-cause analysis

- Automates security scans and evidence generation

- Enforces airtight compliance with secure, auditable workflows

This isn’t just Platform Engineering—it’s Autonomous Software Delivery.

Lightning Talk: The Need for Speed: Streamlining CI/CD for Rapid App Releases by Hassan and Clinton, Capital One

4 PM > 4:20 PM (PST)

This session will cover strategies and techniques used to significantly reduce CI merge times and accelerate release frequency. Learn how we implemented CI/CD improvements to streamline deployments and boost release cadence.

Lightning Talk: Merge Queue at Uber Scale by Dhruva Juloori, Uber

4:20 PM > 4:40 PM (PST)

At Uber’s scale, guaranteeing an always-green mainline while processing hundreds of changes per hour across diverse monorepos, each supporting tens of business-critical apps, presents significant challenges, such as growing queues of pending changes, frequent conflicts, build failures, and unacceptable land times. These bottlenecks slow down development, negatively impact developer productivity and sentiment, and make Continuous Integration of changes increasingly difficult. In this talk, I’ll explore the design and evolution of MergeQueue—Uber’s innovative CI scheduling system that ensures mainline stability and production readiness for every commit. By harnessing speculative validation, build-time and change success predictions, and advanced conflict resolution strategies, MergeQueue delivers unmatched efficiency. It has reduced CI resource usage by approximately 53%, CPU usage by 44%, and P95 waiting times by 37%, significantly improving build reliability and developer velocity across Uber’s engineering ecosystem. These optimizations not only eliminate CI bottlenecks but also enable thousands of engineers to ship code rapidly and reliably, ensuring every commit is production-ready.

Key Learnings:

- Scaling CI for High-Commit Velocity – Learn how to design and manage CI systems that process hundreds or even thousands of changes per hour across monorepos while ensuring a green mainline and production-ready commits.

- Optimizing CI Efficiency – Understand how speculative validation, build-time predictions, and change success predictions significantly reduce CI resource usage, CPU consumption, and waiting times.

- Conflict Resolution in CI – Discover strategies for handling frequent conflicts, build failures, and long land times, ensuring smooth and efficient Continuous Integration at scale.

- Best Practices for Scalable Developer Productivity – Gain actionable insights on designing and implementing CI/CD systems that minimize bottlenecks, improve developer experience, and maximize efficiency in large-scale engineering environments.

Happy Hour - on Patio/Common Areas

6 PM > 8 PM (PST)

Breakfast - on Patio/Common Areas

7:45 AM > 10 AM (PST)

Agentic coding at Airbnb by Szczepan and Mike, Airbnb

10 AM > 11 AM (PST)

It’s amazing how we can now build working apps just by few-shot prompting LLMs. But try doing this with monorepos of tens of millions of lines of code, like the ones used for planet-scale apps that must be secure and compliant. Responsible agentic coding at scale, where thousands of engineers materialize code changes by engaging in deep sessions with AI, is really challenging. We want to share Airbnb’s journey, the trade-offs we picked, and the learnings, outcomes, and productivity impact we observed.

AutoMobile by Jason Pearson, Zillow

11 AM > 12 PM (PST)

Asking AI agents to one-shot apps and hoping their models have enough training to generate the right code was a temporary phase. AutoMobile brings the exact platform-specific context for mobile engineers to explore their apps and source code simultaneously while modernizing how we test them.

AutoMobile is a comprehensive set of tools, including an MCP that enables AI agents to interact with mobile devices, and it comes with automated test authoring, AI-supported test recovery, and drop-in support for test execution. I’ll be walking through some of the development journey and system design, with live demos to illustrate the capabilities along the way. I’d like to show you what all of this can do and how tools like this can change the perspective on what’s possible.

Lunch - on Patio/Common Areas

12 PM > 1 PM (PST)

From 25 Minutes to 2.5: Okta’s Journey to Faster Builds by Anugrah and Jason, Okta

1 PM > 2 PM (PST)

Tired of glacial Maven build times? We were, too. Slashing build times in a large, 1,400-module project is a deliberate journey. This session reveals our multi-year effort to optimize our developer experience. It isn’t just about the destination; it’s about the critical milestones and unexpected hurdles we encountered along the way.

We’ll share how we used build performance insights to identify and fix critical bottlenecks, navigated challenges during a major Java upgrade, adapted to the performance characteristics of new developer hardware, and implemented caching mechanisms to achieve dramatically faster feedback loops. Learn practical strategies and key considerations for significantly improving your build speeds and boosting developer productivity, regardless of your specific tech stack.

Debug Less, Deploy More: Intuit’s CI/CD Productivity Evolution by Katie and Vijay, Intuit

2 PM > 3 PM (PST)

At Intuit, we’re helping developers move faster and smarter by transforming how we build, test, and deploy software. It starts with GenAI-powered failure analysis that reduces time-to-diagnosis from hours to minutes, and continues with a shift toward automated promotions across environments, GitHub Actions, and Argo-powered progressive delivery with built-in auto rollbacks.

By reducing friction in debugging and improving deployment confidence, we’ve already made meaningful gains in developer efficiency. Now, we’re leaning into a bold future—aiming to double deployment frequency and slash lead time for change by simplifying CI and enhancing the intelligence of our promotion pipelines.

This talk walks through the evolution of our CI/CD platform, the developer pain points we tackled, the cultural shifts that made it all stick, and the future ideal state of our platform. If you’re scaling Developer Experience in a complex org, you’ll walk away with actionable insights—proven strategies your engineers will thank you for!

Crossing the communication chasm to drive targeted productivity improvements by Rebecca Fitzhugh, Atlassian

3 PM > 4 PM (PST)

Atlassian platform teams are responsible for driving cross-cutting engineering efforts to increase developer productivity and uplift engineering health. This means those teams working on developer productivity have a unique reliance on other teams to ensure the adoption of their platform capabilities – getting code changes into other teams’ codebases is critical to their success.

However, we heard through our quarterly DevProd survey that there was an organizational chasm between the product and platform teams, resulting in the product teams being inundated with many requests that often came in sideways without clarity on the actual ask from the platform teams. To be effective in improving developer productivity, we needed to become more effective in communicating with engineering teams across the org and give them clear visibility into upcoming priorities so they can plan ahead.

Join us to learn how Atlassian bridged the gap between platform and product teams by using campaigns as the unit of change to effectively scope, target, and communicate changes across hundreds of engineering teams and thousands of services to sustainably drive productivity improvements.

Lightning Talk: How to be quick without being dirty: maintaining quality at high velocity by Benjie, Shipyard and Erin, Quotient

4 PM > 4:20 PM (PST)

When it comes to software quality, moving faster doesn’t necessarily mean you’re in a better place, especially when you don’t have the right pipelines or processes set up. Eventually, the bugs resulting from shortcuts or poor practices outweigh the benefits of shipping fast. What are some indicators that you might be moving too fast? And how can you keep a good pace while being confident your code is stable and error-free?

When you’ve got proper safeguards in place (a good warning system, sufficient test coverage, and strong CI/CD), you can spend more time thinking about coding and less about risk. Where do you start when building out these systems? And until you have your testing and infra in place, how can you supplement that with manual QA and UAT?

You don’t always have time to implement the perfect architecture or the best-practice solution. However, once you know what matters in a dev decision, you can implement one that is almost as good in a fraction of the time.

In this talk, Erin and Benjie will talk about how to make quick, smart decisions during development so that you can move fast and not break things. They’ll discuss how you can de-risk processes so that devs don’t have to supervise every new deployment. And they’ll talk about how you can deconstruct workflows to promote developer productivity holistically.

Lightning Talk: Developer Velocity in the AI Era: The Databricks Way by Akshat and Janusz, Databricks

4:20 PM > 4:40 PM (PST)

At Databricks, we’ve been on a journey to transform the developer experience from the ground up in the age of AI. In this session, we will share how our Developer Platform org is building systems that scale as our engineering scales with AI: remote build pods that untether productivity from local machines, CI/CD pipelines that support complex open-source and monorepo workflows, and next-gen testing infrastructure, with strategic use of AI tooling and agents across the board.

You’ll hear lessons learned, trade-offs we’ve navigated, and the key principles guiding our work. This talk will offer a blueprint for building high-leverage systems that let engineers ship faster, with more confidence in the age of AI.

Lightning Talk: Speed, Flow, and Metrics: Mastering the Developer Productivity Paradox by Anjali Gugle, Cisco Systems

4:40 PM > 5 PM (PST)

In the race to accelerate software delivery, organizations often focus heavily on metrics, dashboards, and automation. But are we measuring the right things? Are we truly optimizing for meaningful productivity, or simply encouraging activity? Developer experience (DX) goes beyond just delivering software faster—it’s about reducing friction, ensuring quality, and creating an environment where developers can innovate with confidence.

This session will examine the hidden trade-offs in developer productivity and introduce practical strategies for optimizing workflows. We’ll explore methods to measure and reduce bottlenecks such as approval times, review cycles, and resource utilization, all while maintaining high-quality standards. Additionally, we’ll discuss how AI-powered tools can transform productivity by simplifying documentation, elevating architecture, and accelerating software quality through gating and synthetic testing.

A key focus will be Productivity Metrics and Measurement: how organizations can assess and optimize developer satisfaction, rather than just tracking activity. We will share insights on how developer satisfaction directly correlates with improved productivity and business outcomes, and how measuring it helps create a developer-first culture.

Through a real-world case study, we’ll highlight how adopting advanced productivity strategies and AI-driven workflows helped a large organization reduce friction, accelerate delivery, and improve developer satisfaction.

Join us as we explore how to build a developer-first culture, where developers can work faster, smarter, and more joyfully—ultimately driving both innovation and business success.

Closing Ceremony

5 PM > 5:15 PM (PST)

Happy Hour - on Patio/Common Areas

5:15 PM > 6:30 PM (PST)