DragonCrawl: Shifting Mobile Testing to the Left with AI

The Developer Platform team at Uber continually develops new ideas to improve the developer experience and strengthen the quality of our apps. Quality and testing go hand in hand, and in 2023 we took on a new challenge: changing how we test our mobile applications, with a focus on machine learning (ML). Specifically, we are training models to test our applications the way real humans would.

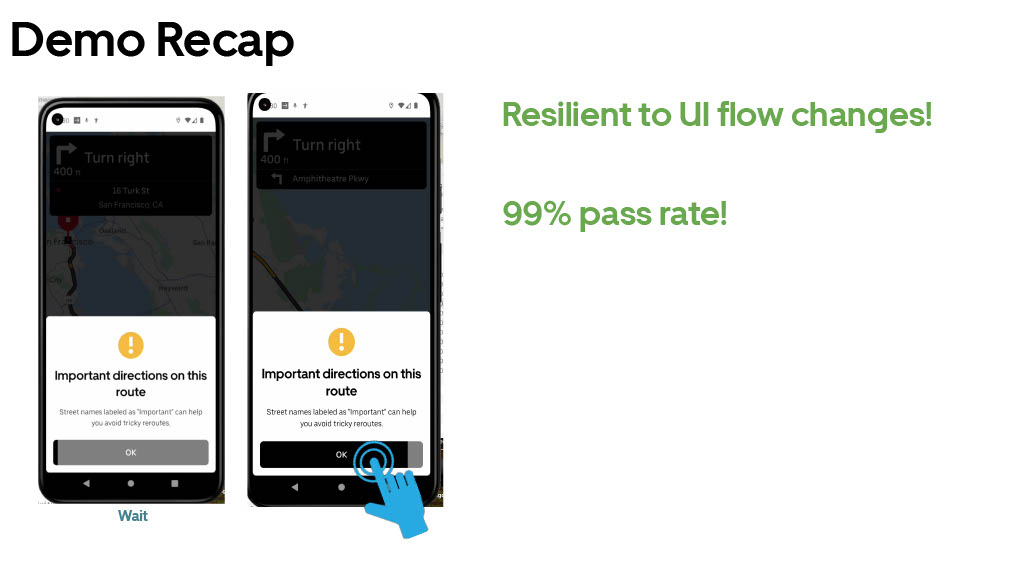

Mobile testing remains an unresolved challenge, especially at our scale, which encompasses thousands of developers and over 3,000 simultaneous experiments. Manual testing carries high overhead and cannot be run extensively for every minor code change. Test scripts scale better, but they break frequently on minor updates such as new pop-ups and changed buttons. Every such change, no matter how small, requires another manual update to the test scripts.

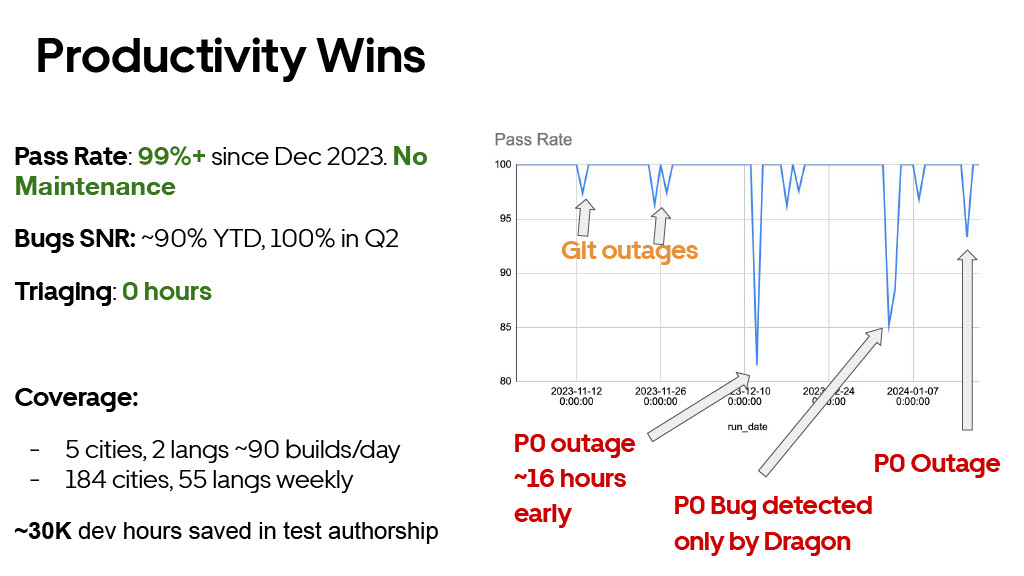

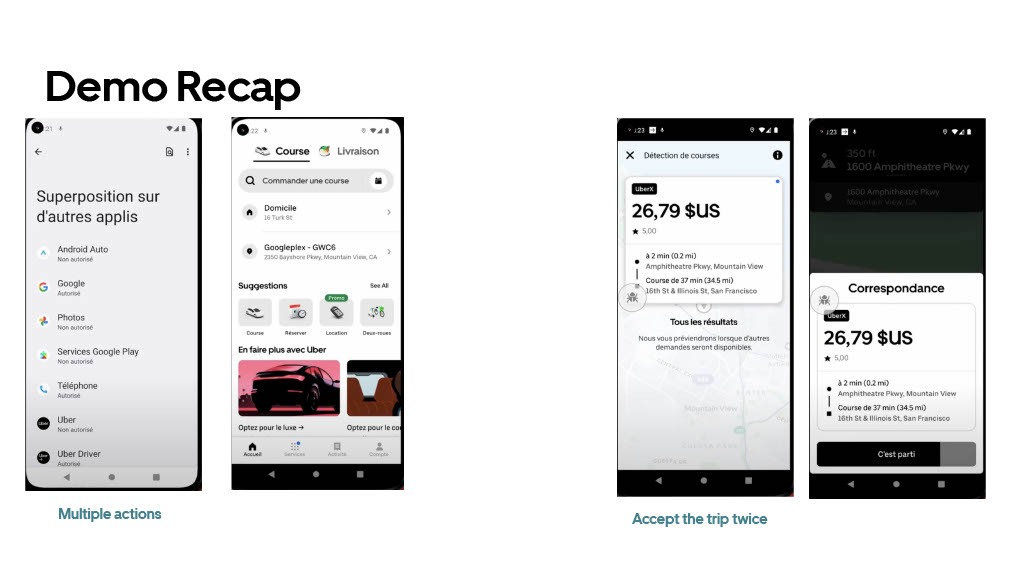

Consequently, engineers who maintain these test scripts spend 30-40% of their time on maintenance. Furthermore, these substantial maintenance costs significantly hinder the tests' adaptability and reusability across diverse cities and languages (imagine having to hire manual testers or mobile engineers for the 50+ languages that we operate in!), which makes it difficult for us to scale testing efficiently and ensure Uber operates with high quality globally.

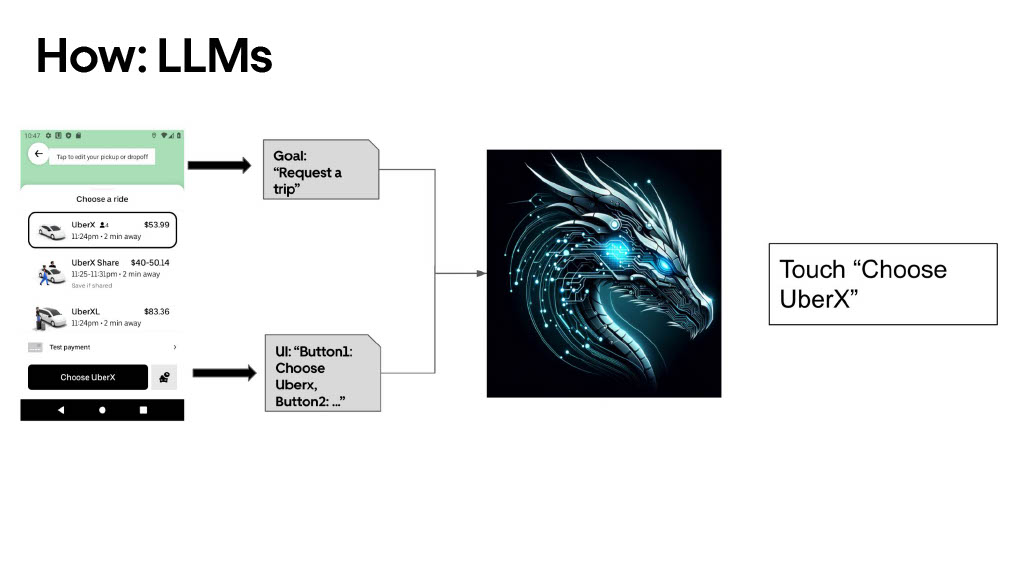

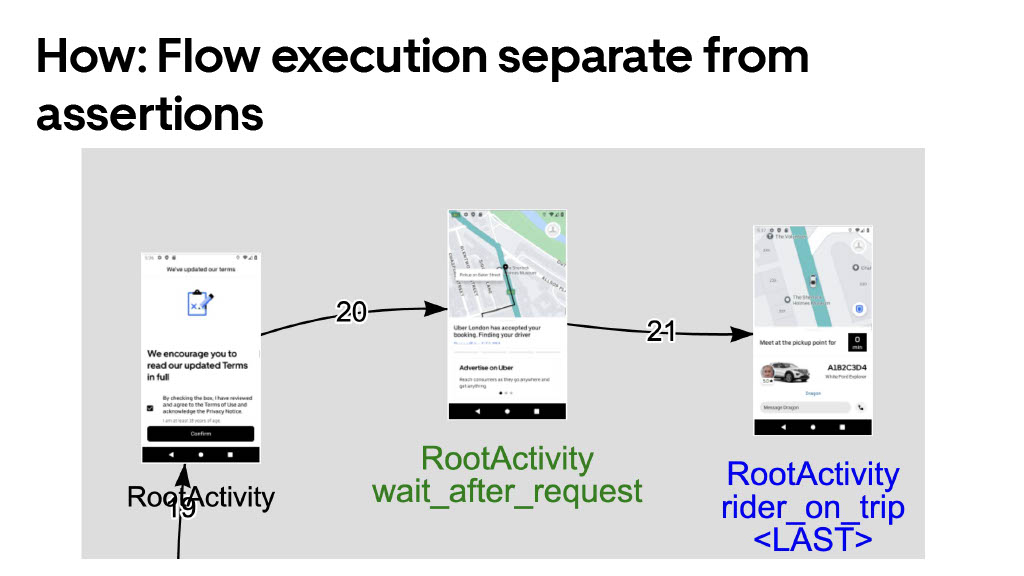

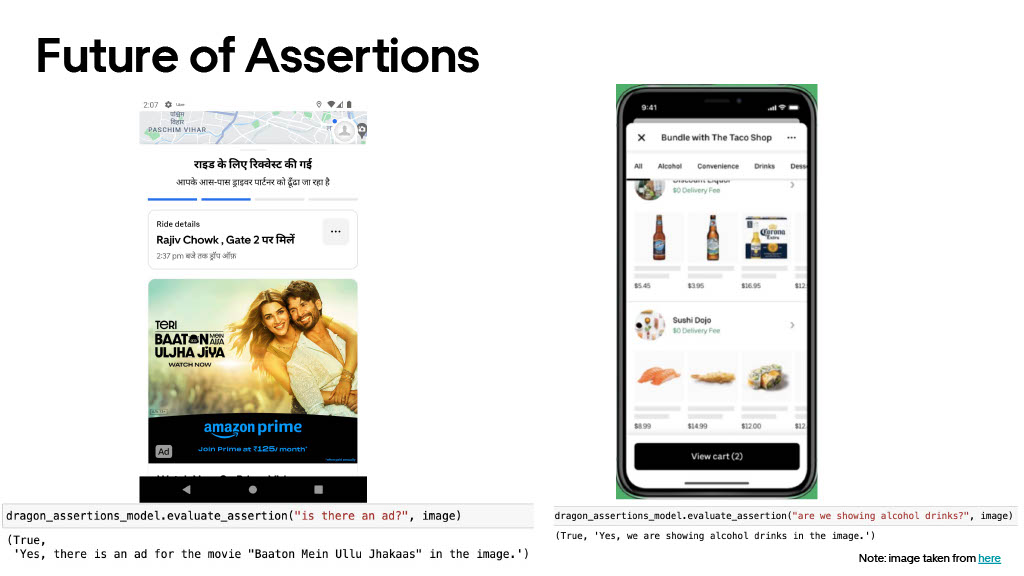

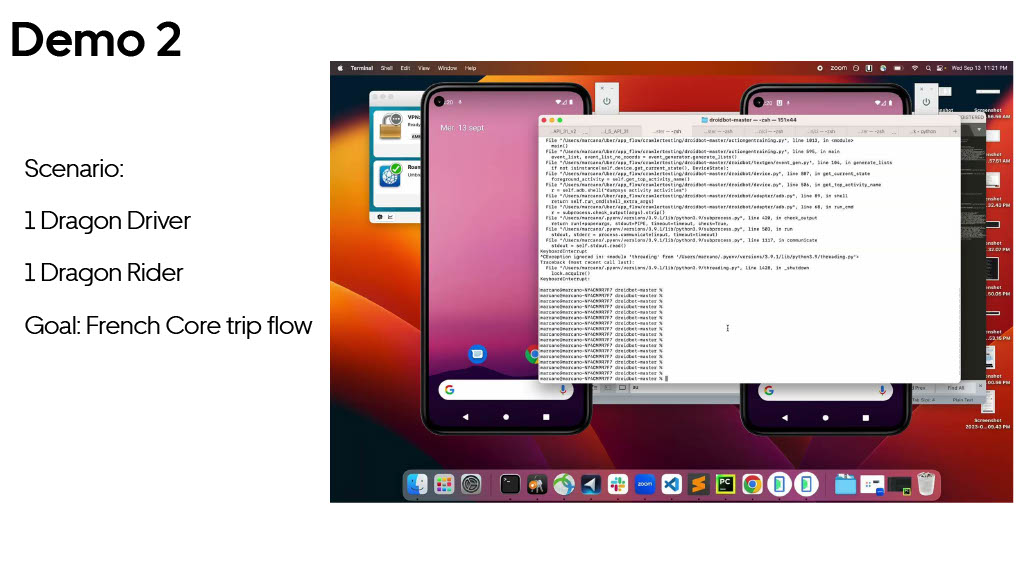

To solve these problems, we created DragonCrawl, a system that uses large language models (LLMs) to execute mobile tests with the intuition of a human. It decides what actions to take based on the screen it sees and its goals, and independently adapts to UI changes, just like a real human would.

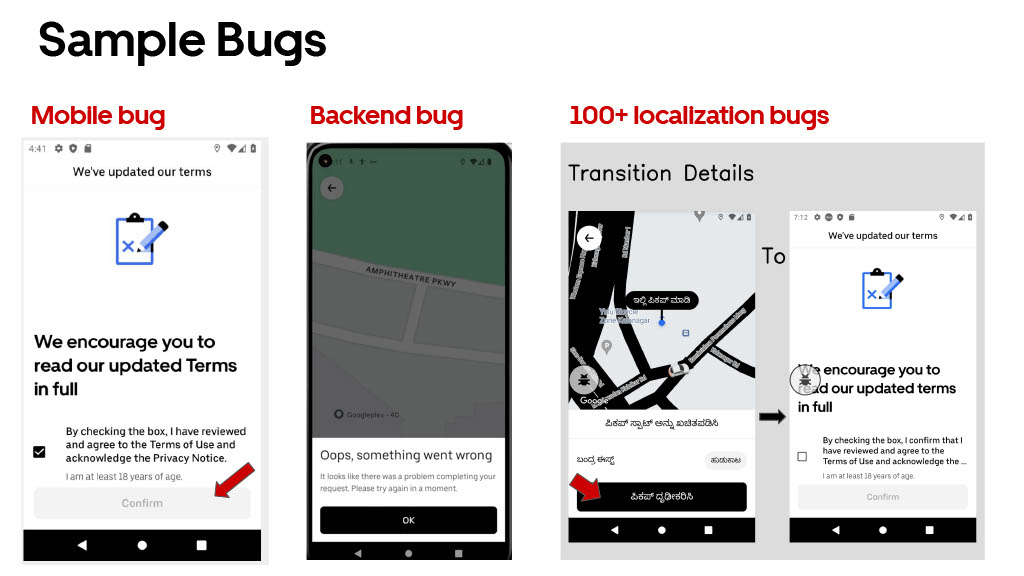
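To make the decision loop described above concrete, here is a minimal sketch in Python. It is not DragonCrawl's implementation: `UiElement`, `choose_action`, and `keyword_overlap_score` are hypothetical names, and the keyword-overlap scorer is only a toy stand-in for the language model. The point it illustrates is that the action is chosen from what is on the screen and the goal, not from hard-coded element identifiers, so a renamed button or an unexpected pop-up does not break the test.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class UiElement:
    """A tappable element on the current screen (hypothetical type)."""
    element_id: str
    label: str


# A scorer rates how well tapping `element` advances `goal` on this screen.
# In a real system this would be a model call; here it is just a callable.
Scorer = Callable[[str, Sequence[UiElement], UiElement], float]


def keyword_overlap_score(
    goal: str, screen: Sequence[UiElement], element: UiElement
) -> float:
    """Toy stand-in for a model: count words shared by goal and label."""
    goal_words = set(goal.lower().split())
    label_words = set(element.label.lower().split())
    return float(len(goal_words & label_words))


def choose_action(
    goal: str, screen: Sequence[UiElement], score: Scorer
) -> UiElement:
    """Pick the on-screen element with the highest score for the goal."""
    if not screen:
        raise ValueError("screen has no tappable elements")
    return max(screen, key=lambda element: score(goal, screen, element))


# Usage: the button id changed and a promo pop-up appeared, but the
# choice depends only on what is visible, so the step still succeeds.
screen_after_ui_change = [
    UiElement("popup_close_17", "Dismiss promo"),
    UiElement("btn_request_v2", "Request ride"),
]
chosen = choose_action(
    "request a ride", screen_after_ui_change, keyword_overlap_score
)
print(chosen.element_id)
```

A script that taps a fixed element id would fail as soon as that id changes. Here, swapping the scorer for a stronger model changes the quality of the decisions without changing the loop itself.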

Of course, innovation comes with new bugs, challenges, and setbacks, but the effort was worth it. We did not give up on our mission to bring code-free testing to the Uber apps, and towards the end of 2023, we launched DragonCrawl. Since then, we have been testing some of our most important flows with high stability, across different cities and languages, and without having to maintain the tests. With DragonCrawl, scaling mobile testing and ensuring quality across so many languages and cities went from humanly impossible to possible. In the three months after launch, DragonCrawl blocked ten high-priority bugs from impacting customers while saving thousands of developer hours and reducing test maintenance costs.

In this talk, we will dive deep into our architecture, challenges, and results. We will close by touching briefly on what is in store for DragonCrawl.